New discoveries #3

Self-Supervised Learning, BioMassters, SEN2VENµS & the Doodleverse

Welcome to the third issue of the satellite-image-deep-learning newsletter! I am excited to share that the newsletter continues to grow and now has over 1130 subscribers 🎉

Rare Wildlife Recognition with Self-Supervised Representation Learning

Supervised machine learning techniques typically require large amounts of annotated training data. This can be costly to acquire and creates a barrier to the use of these techniques by organisations such as charities. This paper presents a methodology to reduce the amount of annotation required by using self-supervised pre-training on un-annotated data. Figure 2 from the paper (below) shows the basic principle of the pre-training: an encoder network is trained to generate meaningful representations of the image data by using contrastive learning:

In contrastive learning the model learns meaningful representations by maximising the similarity between two randomly augmented views of the same image, and minimising the similarity between unmatched pairs of images. This pre-trained model is then fine-tuned on annotated images. The paper shows that by using a combination of recent contrastive learning methodologies it is ultimately possible to train high-accuracy models whilst annotating only a fraction of the data, saving time and 💰. Given this obvious benefit I expect this technique to become more mainstream in the future.
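To make the contrastive objective more concrete, here is a minimal sketch of an InfoNCE-style loss in plain Python. It is an illustration only and is not taken from the paper: the function names, the `temperature` value and the toy embeddings are all assumptions, and a real pipeline would compute this on batches of encoder outputs in a deep learning framework, usually symmetrised over both views.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two embedding vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(views_a, views_b, temperature=0.5):
    """Simplified one-directional InfoNCE-style loss (hypothetical helper).

    views_a[i] and views_b[i] are embeddings of two augmented views of image i.
    Every views_b[j] with j != i is treated as an unmatched (negative) example.
    """
    n = len(views_a)
    total = 0.0
    for i in range(n):
        # Scaled similarity of view i to every candidate in the other set
        logits = [
            cosine_similarity(views_a[i], views_b[j]) / temperature
            for j in range(n)
        ]
        # Cross-entropy with the matching pair (j == i) as the correct class,
        # using the log-sum-exp trick for numerical stability
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(l - max_logit) for l in logits)
        )
        total += log_sum_exp - logits[i]
    return total / n


# Toy embeddings: in practice these would come from the encoder network
views_a = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]      # each view resembles its partner
mismatched = [[0.1, 0.9], [0.9, 0.1]]   # partners swapped

print(info_nce_loss(views_a, matched))     # lower loss
print(info_nce_loss(views_a, mismatched))  # higher loss
```

The loss is low when each embedding is closer to its own augmented partner than to the views of other images, which is the behaviour the pre-training step rewards.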

Competition: BioMassters 🌳

In order to understand how much carbon a forest can trap, scientists and conservationists seek to quantify the above-ground biomass. Compared to alternative techniques, remote sensing methods offer a much faster, less destructive, and more geographically expansive biomass estimate. This competition challenges entrants to estimate the yearly biomass of different sections in Finland's forests using imagery from Sentinel-1 and Sentinel-2, with ground truth from airborne LiDAR surveys and in-situ measurements.

💰 $10k prizes

🗓️ Competition End Date: Jan 27, 2023

https://www.drivendata.org/competitions/99/biomass-estimation/

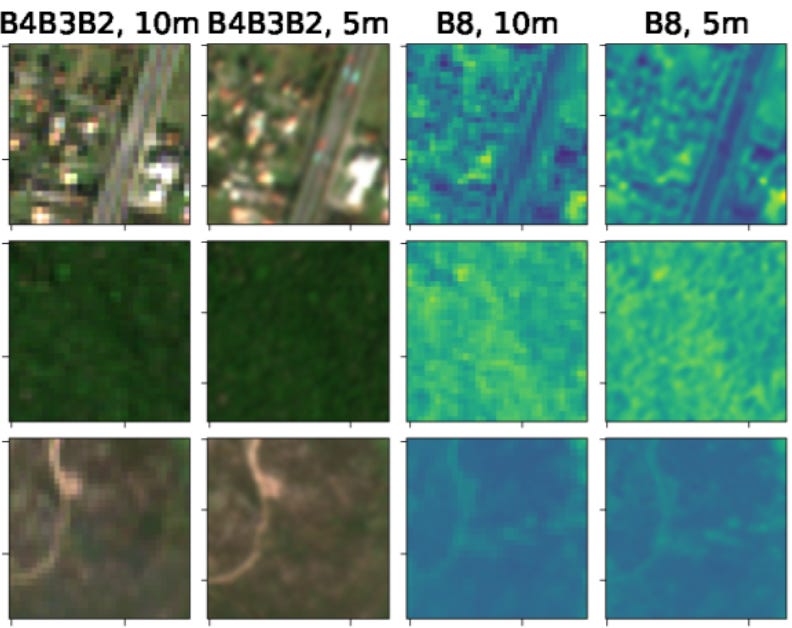

SEN2VENµS: a Dataset for the Training of Sentinel-2 Super-Resolution Algorithms

SEN2VENµS is an open dataset composed of 10 m and 20 m cloud-free Sentinel-2 images with accompanying high-resolution references at 5 m resolution acquired on the same day by the VENµS satellite. The dataset covers 29 locations with a total of 132,955 patches of 256×256 pixels at 5 m resolution and can be used for the training and comparison of super-resolution neural networks (these are networks that enhance the resolution of images). Given the widespread use of Sentinel imagery, the development of super-resolution networks could have significant benefits for end users.
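As a quick sanity check on what those resolutions imply, the sketch below works out the upscaling factor a super-resolution network would need for each Sentinel-2 band group. It assumes that each low-resolution input patch covers the same ground footprint as its 256×256 reference patch; the function and constant names are hypothetical and are not part of the dataset's tooling.

```python
# Values quoted above: 5 m reference patches of 256 x 256 pixels
REFERENCE_RES_M = 5
PATCH_SIZE_PX = 256


def patch_geometry(input_res_m, reference_res_m=REFERENCE_RES_M,
                   patch_size_px=PATCH_SIZE_PX):
    """Return (upscaling factor, input patch size in px, footprint in m).

    Assumes the input patch and the reference patch cover the same ground area.
    """
    footprint_m = patch_size_px * reference_res_m   # side length on the ground
    scale = input_res_m // reference_res_m          # required upscaling factor
    input_px = footprint_m // input_res_m           # input patch side in pixels
    return scale, input_px, footprint_m


for res in (10, 20):
    scale, input_px, footprint_m = patch_geometry(res)
    print(f"{res} m bands: {scale}x upscaling, "
          f"{input_px} x {input_px} input pixels, "
          f"{footprint_m} m footprint")
# 10 m bands: 2x upscaling, 128 x 128 input pixels, 1280 m footprint
# 20 m bands: 4x upscaling, 64 x 64 input pixels, 1280 m footprint
```

Under that assumption the 10 m bands need a 2x network and the 20 m bands a 4x network to reach the 5 m reference grid.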

Video: Satellite image segmentation with Dan Buscombe

In case you missed it earlier this week, in this new video I catch up with Dan Buscombe to discuss his Doodleverse segmentation gym 🏋️‍♂️

A PyTorch baseline solution for the BioMassters competition: https://github.com/fnands/BioMassters_baseline