New discoveries #23

METEOR, Seeing the roads through the trees, A New Learning Paradigm for Foundation Model-based Remote Sensing Change Detection, Globe230k dataset & new group on X/Twitter

Welcome to the 23rd edition of the newsletter, and the first of 2024! I'm delighted to share that the newsletter now has 8,188 subscribers 🔥

METEOR: Meta-learning to address diverse Earth observation problems across resolutions

Unlike traditional models that are trained on a single task (for example, scene classification on one dataset), meta-learning trains a model across a variety of tasks. This diversity helps the model learn higher-level knowledge that is common across tasks, so that it can adapt quickly to a new one. METEOR is a new meta-learning methodology for Earth observation problems across different resolutions.
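To make "training on a variety of tasks" concrete, here is a minimal Python sketch of how one binary few-shot task might be drawn for meta-training. It follows the spirit of the METEOR pre-training task described in the next paragraph (one randomly chosen land cover class against the rest). It assumes a labelled pool of `(image_id, label)` pairs. The function name `sample_binary_task` and the support/query split are illustrative assumptions (the split is the usual convention in episodic meta-learning), not METEOR's actual code.

```python
import random
from collections import defaultdict


def sample_binary_task(samples, k_shot, rng):
    """Draw one binary few-shot task from a list of (image_id, label) pairs.

    Hypothetical helper: one randomly chosen land cover class is the positive
    class and all other classes are pooled as negatives. Returns the positive
    class plus a support set (used to adapt the model) and a disjoint query
    set (used to evaluate the adapted model), each holding k_shot positives
    and k_shot negatives labelled 1 and 0.
    """
    by_class = defaultdict(list)
    for image_id, label in samples:
        by_class[label].append(image_id)

    # A class can be the positive class only if it can fill support and query.
    eligible = sorted(c for c, ids in by_class.items() if len(ids) >= 2 * k_shot)
    if not eligible:
        raise ValueError("no class has at least 2 * k_shot examples")
    positive = rng.choice(eligible)
    negatives = [i for c, ids in by_class.items() if c != positive for i in ids]
    if len(negatives) < 2 * k_shot:
        raise ValueError("not enough negative examples")

    pos = rng.sample(by_class[positive], 2 * k_shot)
    neg = rng.sample(negatives, 2 * k_shot)
    support = [(i, 1) for i in pos[:k_shot]] + [(i, 0) for i in neg[:k_shot]]
    query = [(i, 1) for i in pos[k_shot:]] + [(i, 0) for i in neg[k_shot:]]
    rng.shuffle(support)
    rng.shuffle(query)
    return positive, support, query
```

Calling this repeatedly with a seeded `random.Random` yields a stream of different binary tasks; a meta-learner is then optimised so that a few gradient steps on each support set give good accuracy on the matching query set.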

METEOR is a small deep learning meta-model with a single output. It is pre-trained to distinguish one randomly chosen land cover type from the others, using satellite imagery of the same geographic area (medium-resolution multispectral satellite data from SEN12MS). The meta-model is then fine-tuned on downstream classification tasks with different numbers of classes, different spatial and spectral resolutions, and few annotated samples (as few as 5). This level of generalisability is enabled by combining three techniques:

Instance normalisation is used instead of batch normalisation

The input-layer CNN kernels are dynamically changed to allow for different image resolutions

An ensemble of binary classifiers is used for tasks with many classes
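Two of these techniques are easy to sketch in plain Python. Instance normalisation computes statistics per image and per channel, so it behaves identically with a batch of one, which suits few-shot fine-tuning. A set of one-versus-rest binary classifiers can be combined into a multi-class prediction by picking the highest-scoring class. The nested-list image layout, the function names and the argmax combination rule are assumptions for illustration. The learnable scale and shift of real normalisation layers are omitted, and METEOR's own package may combine its binary models differently.

```python
import math


def instance_norm(image, eps=1e-5):
    """Normalise each channel of one image over its own spatial pixels.

    image is a nested list shaped [channels][height][width]. Unlike batch
    normalisation, no statistics are shared across a batch, so the result for
    an image does not depend on which other images it is batched with.
    (Learnable affine scale/shift parameters are omitted in this sketch.)
    """
    out = []
    for channel in image:
        pixels = [v for row in channel for v in row]
        mean = sum(pixels) / len(pixels)
        var = sum((v - mean) ** 2 for v in pixels) / len(pixels)
        scale = 1.0 / math.sqrt(var + eps)
        out.append([[(v - mean) * scale for v in row] for row in channel])
    return out


def ensemble_predict(binary_models, x):
    """Combine one-versus-rest binary classifiers into one multi-class prediction.

    binary_models maps each class name to a callable returning the probability
    that x belongs to that class; the class with the highest score wins.
    """
    scores = {cls: model(x) for cls, model in binary_models.items()}
    return max(scores, key=scores.get)
```

For example, with hypothetical scorers `{"forest": lambda x: 0.2, "water": lambda x: 0.9, "urban": lambda x: 0.4}`, `ensemble_predict` returns `"water"`. Adding a new class only means adding one more binary model, which fits a meta-model that was pre-trained with a single output.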

METEOR outperforms a range of self-supervised approaches on various downstream tasks. These include deforestation detection in 3 m RGB+NIR Planet imagery with 10 training examples, and multi-class land cover classification on 10 m RGB Sentinel-2 imagery using 1 example per class. It is also applied to change detection: trained on a small set of pre- and post-change examples, it can identify the date of a significant change within a long time series of images.
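One simple way to turn per-image predictions into a change date is sketched below. It assumes a classifier that outputs, for each image in a dated series, the probability that the image shows the post-change state. It also assumes a single change, so every split point can be tried and scored. The function name and the scoring rule are illustrative assumptions, not necessarily how METEOR derives the date.

```python
def estimate_change_date(dates, post_probs):
    """Return the first date of the post-change segment, assuming one change.

    post_probs[t] is a classifier's probability that image t shows the
    post-change state. Each split point k (images before k are "pre",
    images from k onwards are "post") is scored by how well it agrees with
    the predictions, and the date at the best-scoring split is returned.
    """
    if len(dates) != len(post_probs) or len(dates) < 2:
        raise ValueError("need at least two dated predictions of equal length")
    best_k, best_score = None, float("-inf")
    for k in range(1, len(dates)):
        # Agreement = confidence in "pre" before k plus confidence in "post" after.
        score = sum(1 - p for p in post_probs[:k]) + sum(post_probs[k:])
        if score > best_score:
            best_k, best_score = k, score
    return dates[best_k]
```

With predictions `[0.1, 0.2, 0.1, 0.9, 0.8]` for dates `t0` to `t4`, the best split puts the change at `t3`. Scoring whole segments rather than thresholding each image individually makes the estimate less sensitive to one noisy prediction.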

Overall, this is an exciting demonstration of the potential of few-shot learning for remote sensing imagery, and it shows that tasks with only a few training examples are within reach. METEOR is released as a simple, open-source, ready-to-use Python package. Fine-tuning does not require a GPU and was demonstrated on a 2020 MacBook.

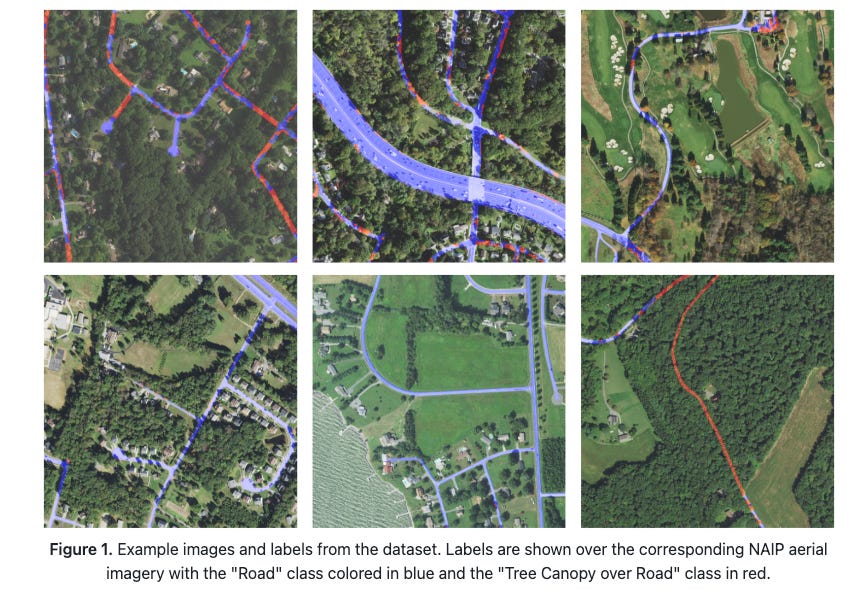

Seeing the roads through the trees: A benchmark for modeling spatial dependencies with aerial imagery

Many vision tasks, particularly in remote sensing, hinge on understanding broad spatial context: for example, deciding whether pixels in an aerial image belong to a road obscured by overhanging trees. This work introduces the Chesapeake Roads Spatial Context (RSC) dataset, a benchmark for evaluating how well geospatial machine learning models use long-range spatial context. Notably, it shows that common semantic segmentation models struggle to capture long-range spatial relationships.
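One way to see why convolutional segmentation models can miss distant context is to compute their theoretical receptive field: the span of input pixels that can influence one output pixel. The sketch below applies the standard formula for a stack of layers given as `(kernel_size, stride)` pairs. The function name is hypothetical, dilation is ignored, and the effective receptive field of a trained network is usually smaller than this theoretical bound.

```python
def receptive_field(layers):
    """Theoretical receptive field (in input pixels) of stacked conv/pool layers.

    layers is a list of (kernel_size, stride) tuples ordered from input to
    output. Each layer widens the field by (kernel_size - 1) multiplied by the
    product of the strides of all earlier layers ("jump"). Dilation is ignored.
    """
    field, jump = 1, 1
    for kernel, stride in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field
```

For instance, ten 3×3 convolutions with stride 1 see only 21 pixels across. At a hypothetical 1 m per pixel, evidence that a road continues beyond a 30 m stretch of tree cover would be invisible to such a stack without downsampling or attention.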

The study shows how model performance changes as the distance to the spatial context relevant to a decision varies. To my knowledge this aspect has been underexplored in segmentation modelling, and it has implications for a wide range of applications. I am also delighted to see the dataset released on Hugging Face, with an accompanying codebase built on TorchGeo: two emerging pillars of the geospatial ML community.
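The kind of analysis described above can be sketched as grouping per-example correctness by how far away the deciding context lies. The sketch assumes each evaluated example is recorded as a `(distance, is_correct)` pair. The record format, bin width and function name are assumptions for illustration, not the benchmark's evaluation code.

```python
from collections import defaultdict


def accuracy_by_distance(records, bin_width):
    """Group per-example correctness by distance to the relevant context.

    records holds (distance, is_correct) pairs, where distance is how far
    (for example, in pixels) the evidence needed for the decision lies from
    the pixel being classified. Returns {bin_start: accuracy}, sorted by bin.
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for distance, correct in records:
        start = int(distance // bin_width) * bin_width
        totals[start] += 1
        hits[start] += int(correct)
    return {b: hits[b] / totals[b] for b in sorted(totals)}
```

Plotting the returned accuracies against bin start gives a curve of performance versus context distance. A model that truly uses long-range context would keep that curve flat.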

A New Learning Paradigm for Foundation Model-based Remote Sensing Change Detection

Change detection (CD) plays a crucial role in observing and interpreting changes in land cover, which is key to understanding many environmental and man-made phenomena. However, existing deep learning models for CD are often limited by how little knowledge they can extract from the small datasets typically available for training. These datasets, while valuable, rarely capture the full complexity of land cover change over time.

The BAN framework addresses this by leveraging the broad knowledge of foundation models. It has three core components:

Frozen Foundation Model: a large pre-trained model such as CLIP, whose weights are kept frozen and which brings knowledge from training on diverse datasets. It forms the knowledge backbone of the framework.

Bi-Temporal Adapter Branch (Bi-TAB): flexible in its composition, the Bi-TAB can be either an existing CD model or a custom sequence of blocks designed specifically for CD. It handles the task-specific challenges of change detection.

Bridging Modules: these connect the general-purpose knowledge of the foundation model to the specific demands of CD, transferring and integrating that broad knowledge into the CD-focused Bi-TAB.
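To make the data flow between these three components concrete, here is a deliberately toy Python sketch. In it, "images" are short lists of numbers, and the frozen foundation model is a fixed function standing in for something like CLIP. The Bi-TAB is a single trainable scale, and the bridge adds a weighted copy of the foundation features into the branch before the two dates are compared. All names (`frozen_foundation_model`, `BiTemporalAdapter`, the weights and the 0.5 threshold) are hypothetical. BAN's real components are neural network blocks, and this is not its implementation.

```python
from dataclasses import dataclass


def frozen_foundation_model(image):
    """Hypothetical stand-in for a frozen pre-trained encoder (e.g. CLIP).

    The mapping is fixed: nothing here is ever updated during training.
    """
    return [2.0 * v + 1.0 for v in image]


@dataclass
class BiTemporalAdapter:
    """Hypothetical trainable parts: a tiny Bi-TAB plus one bridging weight."""

    tab_weight: float = 1.0     # Bi-TAB: task-specific processing of each date
    bridge_weight: float = 0.1  # bridge: how much foundation knowledge to inject

    def features(self, image):
        tab = [self.tab_weight * v for v in image]
        fm = frozen_foundation_model(image)  # same frozen model for both dates
        # Bridging: inject foundation-model features into the Bi-TAB features.
        return [t + self.bridge_weight * f for t, f in zip(tab, fm)]

    def change_map(self, image_t1, image_t2, threshold=0.5):
        """Per-element change mask from the difference of fused features."""
        f1, f2 = self.features(image_t1), self.features(image_t2)
        return [abs(a - b) > threshold for a, b in zip(f1, f2)]
```

Only the adapter's fields would be trained; the foundation model is shared by both dates and never updated. That separation is consistent with the small number of added learnable parameters reported for BAN.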

What's exciting about the BAN framework is its universality: it is the first of its kind to adapt a general-purpose foundation model to change detection. Initial results are promising, with experiments showing improvements of up to 4.08% in Intersection over Union (IoU), a key metric for assessing CD models, while adding very few learnable parameters.
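For readers less familiar with the metric, here is a minimal sketch of IoU for the "changed" class of a binary change mask. The masks are assumed to be flat sequences of 0/1 values. Returning 1.0 when both masks are empty is a convention choice in this sketch; evaluation toolkits differ on that edge case.

```python
def change_iou(pred, target):
    """Intersection over Union of the 'changed' class for two binary masks.

    pred and target are equal-length flat sequences of 0/1 (or bool) values.
    If neither mask marks any change, this sketch returns 1.0 by convention.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    return intersection / union if union else 1.0
```

For `pred = [1, 1, 0, 0, 1]` and `target = [1, 0, 0, 1, 1]`, two pixels are changed in both and four are changed in either, giving an IoU of 0.5. Because unchanged pixels that both masks agree on are ignored, IoU is more demanding than plain pixel accuracy when change is rare.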

This work showcases the untapped potential of foundation models for change detection, and points to a future in which established CD models are enhanced with the knowledge of foundation models.

Globe230k: A Benchmark Dense-Pixel Annotation Dataset for Global Land Cover Mapping

Globe230k is a new large-scale dataset for land cover semantic segmentation. It contains 232k annotated images, each 512x512 pixels at a spatial resolution of 1 meter. It covers 10 primary categories: cropland, forest, grass, shrub, wetland, water, tundra, impervious surfaces, bareland, and ice/snow. Images are sampled from regions worldwide. Alongside RGB bands, it includes digital elevation model (DEM) and SAR data, enabling research on multimodal data fusion.
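For a sense of scale, the footprint of each tile follows directly from its size and resolution. Treating "232k" as 232,000 and assuming the tiles do not overlap (an assumption; any overlap would reduce the total), the imaged area is roughly:

$$
A_{\text{tile}} = (512\ \text{px} \times 1\ \text{m/px})^2 = 262{,}144\ \text{m}^2 \approx 0.262\ \text{km}^2
$$

$$
A_{\text{total}} \approx 232{,}000 \times 0.262144\ \text{km}^2 \approx 6.08 \times 10^{4}\ \text{km}^2
$$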

Backend-agnostic deep learning

The recently released Keras 3.0 runs on multiple backends (TensorFlow, PyTorch and JAX), making it possible to write backend-agnostic code for designing and training neural networks. The notebook below showcases this by implementing a simple Vision Transformer that mixes libraries: PyTorch to load and process the EuroSAT dataset, an einops function to convert the images into patches, and JAX for a custom training loop.
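The patch step of a Vision Transformer is easy to misread, so here is a plain-Python sketch of what a typical einops call for channels-last images does. The call in question is `rearrange(x, "b (h p1) (w p2) c -> b (h w) (p1 p2 c)", p1=p, p2=p)`. Whether the notebook uses exactly this pattern, or a channels-first one, is an assumption. The function name `patchify` is hypothetical and handles a single image stored as nested lists.

```python
def patchify(image, patch_size):
    """Split one channels-last image into flattened, non-overlapping patches.

    image is a nested list shaped [height][width][channels]; height and width
    must be divisible by patch_size. Returns (H/p) * (W/p) patches in
    row-major patch order, each a flat list of p * p * channels values
    ordered (patch row, patch column, channel). This mirrors the einops
    pattern "b (h p1) (w p2) c -> b (h w) (p1 p2 c)" for a single image.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for row in image[top:top + patch_size]:
                for pixel in row[left:left + patch_size]:
                    patch.extend(pixel)  # append all channels of this pixel
            patches.append(patch)
    return patches
```

EuroSAT images are 64×64 pixels, so with 16×16 patches each RGB image becomes a sequence of 16 tokens of 768 values, which the transformer then projects to its embedding size. Separately, in Keras 3 the backend is chosen by setting the `KERAS_BACKEND` environment variable (for example to `"jax"`) before `keras` is imported.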

💻 Notebook (credit Simone Scardapane)

New group on X/Twitter

I use both LinkedIn and X/Twitter, and people on both platforms regularly post interesting content. After the success of the LinkedIn group (which recently crossed 5,000 members), I have created an equivalent group on X/Twitter. The link is below; I hope you will join!

🖥️ Group page